RESEARCH

Perform innovative research today to tackle the challenges of tomorrow.

GereKa [09/25 - 05/26]

Benutzerfreundliche 3D Gebäuderekonstruktion zur Bestandsaufnahme mittels Kamera – User-friendly 3D building reconstruction for as-built surveys using a camera

Projektträger Jülich - Energie und Klima – Energiesystem: Nutzung – Energieeffizienz in Gebäuden (ESN 1)

- 3D semantic segmentation of buildings using BIM (Building Information Modeling)

- Development of a user-friendly smartphone/tablet app

DIGI-PV [07/23 - 06/26]

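Digitale Planung und automatisierte Produktion von Gebäude-integrierter Photovoltaik, Teilvorhaben: Erfassung, Digitalisierung, Klassifizierung und Strukturierung von Gebäudeoberflächen – Digital planning and automated production of building-integrated photovoltaics, subproject: capture, digitization, classification, and structuring of building surfaces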

Research project funded by the Bundesministerium für Wirtschaft und Klimaschutz.

GreenAutoML4FAS [03/23 - 02/26]

Automatisiertes Green-ML anhand des Anwendungsbeispiels Fahrerassistenzsysteme - Automated Green-ML with Application to Driver Assistance Systems

Research project funded by the Bundesministerium für Umwelt, Naturschutz, nukleare Sicherheit und Verbraucherschutz.

VISCODA collaborates with AI (Institut für Künstliche Intelligenz), TNT (Institut für Informationsverarbeitung), and IMS (Institut für Mikroelektronische Systeme).

EVCS Dataset:

Publications:

- L. Chen, S. Südbeck, C. Riggers, T. Geib, K. Cordes, H. Broszio: "EVCS: A Benchmark for Fine-Grained Electric Vehicle Charging Station Detection", GCPR 2025, accepted

- K. Cordes, L. Chen, S. Südbeck, H. Broszio: "Online Optimization of Stereo Camera Calibration Accuracy", 3D in Science & Applications (3D-iSA), ISBN 978-3-942709-34-7, pp. 75-78, 2024, Preprint (pdf)

InFusion [06/20 - 09/23]

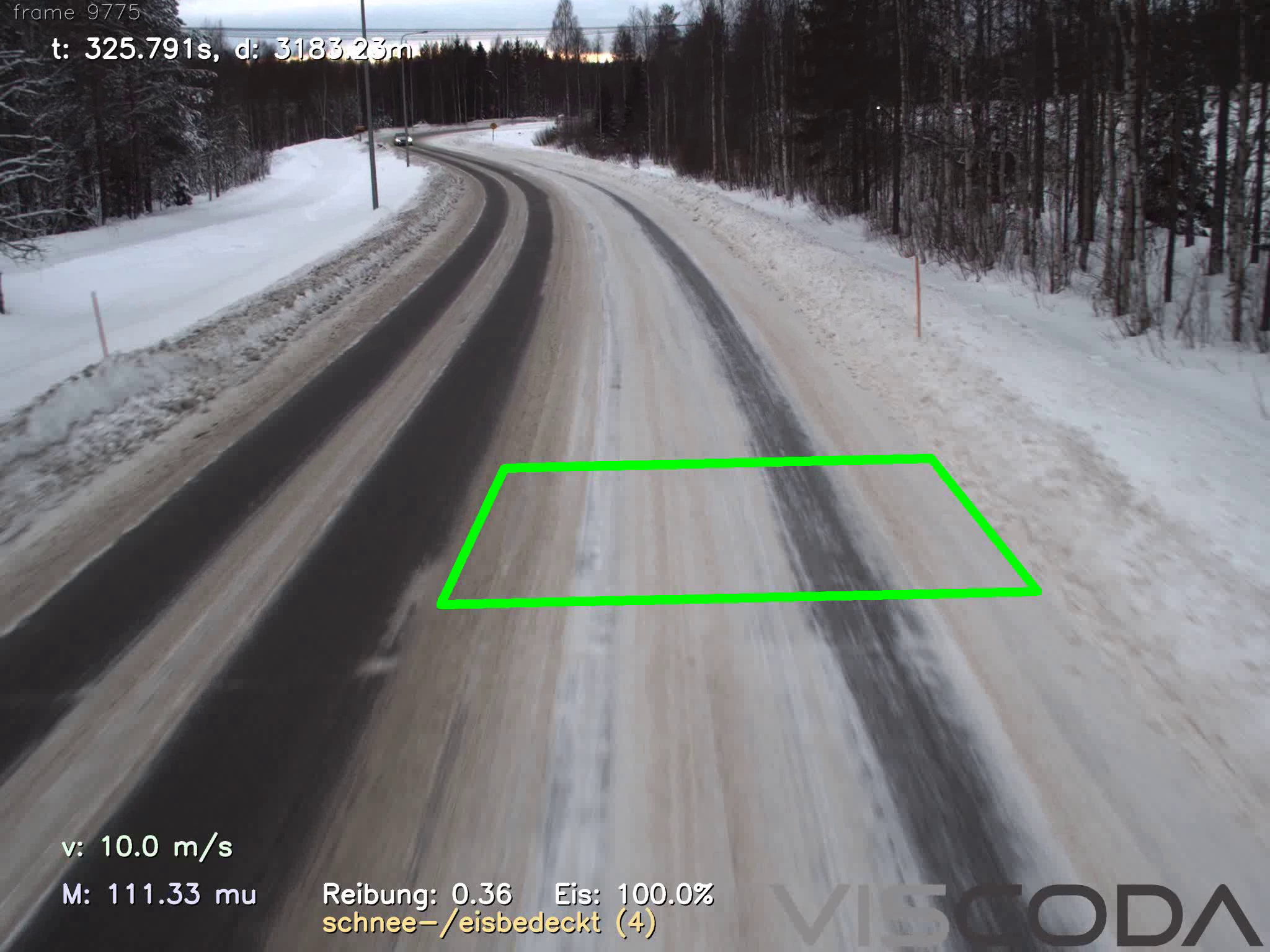

Cloud-Anwendung für zeitlich veränderliche Fahrbahnzustandsinformationen für verschiedene Fahrzeugklassen basierend auf fusionierten Fahrzeugdaten von Fahrzeugen verschiedener Klassen (LKW/PKW) – Cloud application for time-varying road condition information for different vehicle classes based on fused vehicle data from vehicles of different classes

Detection, localization, and classification of road conditions in real time from the in-vehicle front camera. Estimation of vehicle-specific road friction by fusion with information from other sensors (such as vibroacoustic or meteorological sensors, or traction control responses). A cloud-based service increases traffic safety and efficiency for driver assistance and automated driving applications.

- Press release (German)

- Overview (German)

- Final demonstration: 16.01.24, 10h – 16h, Erich-Reinecke-Testbahn Contistraße 1, 29323 Wietze

Publications:

- K. Cordes and H. Broszio: "Camera-Based Road Snow Coverage Estimation", ICCV Workshop: roBustness and Reliability of Autonomous Vehicles in the Open-world (BRAVO), 2023, paper at CVF, paper at ieeexplore

- K. Cordes, C. Reinders, P. Hindricks, J. Lammers, B. Rosenhahn, H. Broszio: "RoadSaW: A Large-Scale Dataset for Camera-Based Road Surface and Wetness Estimation", CVPR Workshop on Autonomous Driving, 2022, paper at CVF, paper at ieeexplore

- Final Report: "InFusion - Cloud-Anwendung für zeitlich veränderliche Fahrbahnzustandsinformationen für verschiedene Fahrzeugklassen", 2023, doi: 10.2314/KXP:1912380390

IWAn [01/21 - 06/23]

Interaktive Werkzeuge zur automatisierten 2D/3D-Annotation – Interactive Tools for Automated 2D/3D Annotation

Development of interactive methods based on computer vision and machine learning to simplify and speed up the annotation (e.g. labeling) of vision data.

The objective is to generate highly accurate annotations with minimal user interaction. The annotated data serves as ground truth for machine learning approaches targeting automated driving applications.

KaBa [05/21 - 03/23]

Kamerabasierte Bewegungsanalyse aller Verkehrsteilnehmer für automatisiertes Fahren – Camera-based Motion Analysis of Road Users for Automated Driving

This project addresses motion estimation of road users, e.g., cars and pedestrians, using monocular image sequences. To achieve that, panoptic segmentation is applied to each camera image to extract object instances of road users. Then, 3D information (position, orientation, and shape) of detected road users is estimated. Detected object instances are associated across frames by fusing their spatial consistency in 2D and 3D. The result is the motion of all detected road users, which is fundamental for the planning phase of automated driving.
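The association step can be illustrated with a minimal sketch. It is not the project's implementation: the `Detection` type, the greedy matching strategy, the linear 3D distance score, and all thresholds and weights below are assumptions chosen for illustration. The sketch combines 2D box overlap (IoU) with proximity of the estimated 3D positions into one affinity and matches detections of two consecutive frames greedily, best affinity first.

```python
from dataclasses import dataclass
from math import dist


@dataclass
class Detection:
    """Hypothetical container for one detected road user in one frame."""
    box: tuple      # 2D bounding box (x_min, y_min, x_max, y_max) in pixels
    center: tuple   # estimated 3D position (x, y, z) in metres


def iou(a, b):
    """Intersection over union of two axis-aligned 2D boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def affinity(prev, curr, max_dist=3.0, weight_2d=0.5):
    """Fuse 2D and 3D spatial consistency into one score in [0, 1].

    max_dist and weight_2d are illustrative assumptions, not project values.
    """
    score_2d = iou(prev.box, curr.box)
    score_3d = max(0.0, 1.0 - dist(prev.center, curr.center) / max_dist)
    return weight_2d * score_2d + (1.0 - weight_2d) * score_3d


def associate(prev_dets, curr_dets, min_affinity=0.3):
    """Greedily match detections of the previous frame to the current frame.

    Returns a dict mapping indices in prev_dets to indices in curr_dets.
    """
    candidates = sorted(
        ((affinity(p, c), i, j)
         for i, p in enumerate(prev_dets)
         for j, c in enumerate(curr_dets)),
        reverse=True,
    )
    matches, used_curr = {}, set()
    for score, i, j in candidates:
        if score < min_affinity:
            break  # all remaining candidate pairs are weaker
        if i in matches or j in used_curr:
            continue  # each detection is matched at most once
        matches[i] = j
        used_curr.add(j)
    return matches
```

Calling `associate(previous_frame, current_frame)` yields the index pairs that continue a track. Unmatched current detections would start new tracks. A globally optimal assignment (for example the Hungarian method) could replace the greedy loop where ambiguous matches are frequent.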

Dieses Projekt wurde mit Mitteln des Europäischen Fonds für regionale Entwicklung gefördert. – This project was partially funded by the European Fund for Regional Development.

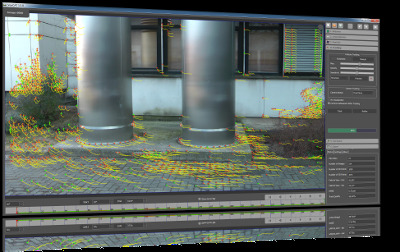

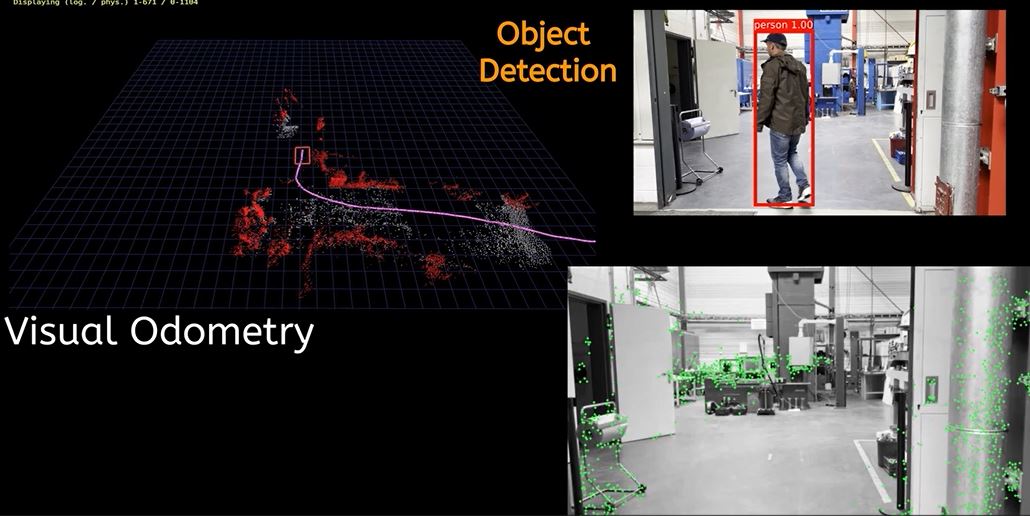

Scene Reconstruction from Video [ongoing]

For the products VooCAT/CineCAT, various algorithms for camera calibration, tracking, structure from motion, and video segmentation are developed. The basis for the scene estimation is the usage of corresponding image features which arise from a 3D structure being mapped to different camera image planes. By using a statistical error model which describes the errors in the position of the detected feature points, a Maximum Likelihood estimator can be formulated that simultaneously estimates the camera parameters and the 3D positions of the image features.
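As an illustration, assume the position errors of the detected feature points are independent and Gaussian with covariance $\Sigma_{ij}$. The text above does not specify the error model, so this is an assumption. Under it, the Maximum Likelihood estimate becomes a weighted least-squares problem over the reprojection errors, commonly known as bundle adjustment. The notation is introduced here for the sketch: $A_i$ denotes the parameters of camera $i$, $P_j$ the 3D position of feature $j$, $p_{ij}$ the detected image position of feature $j$ in image $i$, $\pi$ the camera projection, and $\mathcal{V}_i$ the set of features visible in image $i$.

```latex
\left(\{\hat{A}_i\}, \{\hat{P}_j\}\right)
  = \arg\min_{\{A_i\},\,\{P_j\}}
    \sum_{i} \sum_{j \in \mathcal{V}_i}
    \left( p_{ij} - \pi(A_i, P_j) \right)^{\top}
    \Sigma_{ij}^{-1}
    \left( p_{ij} - \pi(A_i, P_j) \right)
```

With identical isotropic covariances, the weighting drops out and the estimator minimizes the sum of squared reprojection distances in pixels.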

The camera path, the video segmentation, and the reconstructed scene are essential for the integration of virtual objects into the video. The point cloud is used for 3D measurements, such as distances to or between different objects of the scene.

Published Videos [YouTube]:

- Real-time visual odometry & reconstruction

- Example augmentation results video

- 3D reconstruction from monocular video

- Tutorial video

Publications:

- K. Cordes, H. Broszio: "Accuracy Evaluation and Improvement of the Calibration of Stereo Vision Datasets", ECCV - VCAD workshop, 2024, Preprint (pdf)

- K. Cordes, H. Broszio: "Micro Maneuvers: Obstacle Detection for Standing Vehicles using Monocular Camera", IEEE 25th International Conference on Intelligent Transportation Systems (ITSC), pp. 3861-3866, 2022

VooCAT

Real-time Visual Odometry

MicroManeuvers

Patents

- "Method for detecting curbs in the vehicle environment", ("Verfahren zur Erfassung von im Fahrzeugumfeld befindlichen Bordsteinen"), Maciej Korzec, Hellward Broszio, Matthias Narroschke, Nikolaus Meine, DE102016215840A1, 2018-03-01

- "Method of inherent shadow recognition", ("Verfahren zur Eigenschattenerkennung"), Daniel Liebehenschel, Maciej Korzec, Hellward Broszio, Kai Cordes, Carolin Last, DE102016216462A1, 2018-03-01

- "Method for continuous estimation of driving surface plane of motor vehicle" ("Verfahren und Vorrichtung zur Schätzung einer Fahrbahnebene und zur Klassifikation von 3D-Punkten"), Andreas Haja, Hellward Broszio, Nikolaus Meine, DE102011118171A1, 2013-05-16

- "Method for determining e.g. wall in rear area of passenger car during parking" ("Verfahren zur Bestimmung von Objekten in einer Umgebung eines Fahrzeugs"), Andreas Haja, Hellward Broszio, Nikolaus Meine, DE102011113099A1, 2013-03-14

- "Method for determining angle between towing vehicle and trailer" ("Verfahren und Vorrichtung zur Bestimmung eines Winkels zwischen einem Zugfahrzeug und einem daran gekoppelten Anhänger"), Andreas Haja, Hellward Broszio, Nikolaus Meine, DE102011113197A1, 2013-03-14

- "Method for detecting obstacle in car surroundings" ("Verfahren und Vorrichtung zur Detektion mindestens eines Hindernisses in einem Fahrzeugumfeld"), Hellward Broszio, Andreas Haja, Nikolaus Meine, DE102010009620A1, 2011-09-01

IdenT [02/20 - 04/23]

Identifikation dynamik- und sicherheitsrelevanter Trailerzustände für automatisiert fahrende Lastkraftwagen – Identification of dynamic and safety-relevant trailer conditions for automated trucks

The dynamic properties of a truck are determined to a large extent by its trailer. Yet the trailer is barely taken into account in current truck sensor systems. The goal of IdenT is the development of an intelligent trailer sensor network and a cloud-based data platform for reliable real-time estimation of the state of trailer components crucial for automated driving applications. Using a rear-facing camera, VISCODA provides its project partners with highly accurate visual odometry and a geometrically reconstructed scene for sensor fusion on board and in the cloud. Through a combination of rule-based (geometric) and data-driven (machine learning) algorithms, VISCODA additionally detects and localizes road users behind the trailer.

RaSar [01/18 - 12/20]

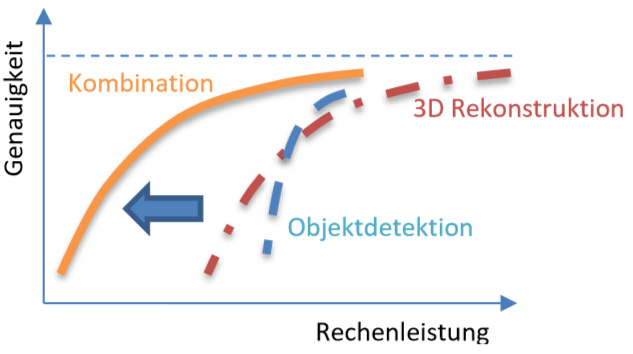

Ressourcenadaptive Szenenanalyse und -rekonstruktion – Resource-adaptive scene analysis and reconstruction

Research project in cooperation with the Institut für Informationsverarbeitung (Leibniz Universität Hannover / LUH) targeting optimal 3D scene reconstruction accuracy and object detection reliability under resource limitations such as hardware-specific computation power.

Dieses Projekt wurde mit Mitteln des Europäischen Fonds für regionale Entwicklung gefördert. – This project was partially funded by the European Fund for Regional Development.

5GCAR [06/17 - 07/19]

Cooperative and connected V2X applications drive further improvements of advanced driver assistance systems (ADAS) and automated driving. For the final demonstrations in the 5GCAR project, three use case classes are selected and representatives for each class are identified. The classes are Cooperative Maneuver, Cooperative Perception, and Cooperative Safety.

VISCODA developed Multiple Object Tracking (MOT) for the use case Lane Merge Coordination.

Additionally, Long-Range Sensor Sharing, See-through, and Network-assisted Vulnerable Road User (VRU) Protection are showcased. The final demonstration took place on the UTAC test track near Paris.

5GCAR is an H2020 5G PPP Phase 2 project funded by the European Commission.

Project Website:

Project Flyer:

Published Videos:

- 5GCAR final demonstration

- 5GCAR pre-demonstration (demo video for MWC 2019)

- Explanation of 5GCAR use cases (demo video for MWC 2018)

Publications:

- K. Cordes, H. Broszio, H. Wymeersch, S. Saur, F. Wen, Nil Garcia, Hyowon Kim: "Radio‐Based Positioning and Video‐Based Positioning", https://doi.org/10.1002/9781119692676.ch8, Wiley, April 2021, Book Chapter at ieeexplore

- K. Cordes and H. Broszio: "Vehicle Lane Merge Visual Benchmark", International Conference on Pattern Recognition (ICPR), IEEE, pp. 715-722, 2021, Supplementary Video, Poster, Benchmark, Paper at ieeexplore

- K. Antonakoglou, N. Brahmi, T. Abbas, A.E. Fernandez Barciela, M. Boban, K. Cordes, M. Fallgren, L. Gallo, A. Kousaridas, Z. Li, T. Mahmoodi, E. Ström, W. Sun, T. Svensson, G. Vivier, J. Alonso-Zarate: "On the Needs and Requirements Arising from Connected and Automated Driving", J. Sens. Actuator Netw. 2020, 9(2):24, OpenAccess: abstract, html, pdf

- K. Cordes, N. Nolte, N. Meine, and H. Broszio: "Accuracy Evaluation of Camera-based Vehicle Localization", International Conference on Connected Vehicles and Expo (ICCVE), IEEE, pp. 1-7, Nov. 2019, Paper at ieeexplore

- "The 5GCAR Demonstrations", Sep. 2019, Deliverable D5.2 (pdf)

- B. Cellarius, K. Cordes, T. Frye, S. Saur, J. Otterbach, M. Lefebvre, F. Gardes, J. Tiphène, M. Fallgren: "Use Case Representations of Connected and Automated Driving", European Conference on Networks and Communications (EuCNC), June 2019, Extended abstract (pdf), Poster (pdf)

- K. Cordes and H. Broszio: "Constrained Multi Camera Calibration for Lane Merge Observation", International Conference on Computer Vision Theory and Applications (VISAPP), SciTePress, pp. 529-536, Feb. 2019, Paper preprint (pdf), Poster (pdf)

- "5GCAR Demonstration Guidelines", May 2018, Deliverable D5.1

- M. Fallgren, M. Dillinger, A. Servel, Z. Li, B. Villeforceix, T. Abbas, N. Brahmi, P. Cuer, T. Svensson, F. Sanchez, J. Alonso-Zarate, T. Mahmoodi, G. Vivier, M. Narroschke: "On the Fifth Generation Communication Automotive Research and Innovation Project 5GCAR - The Vehicular 5G PPP Phase 2 Project", European Conference on Networks and Communications (EuCNC), June 2017, Extended abstract (pdf)

Object Motion Estimation [ongoing]

For the estimation of motion models of moving objects in video, a motion segmentation technique is utilized. Motion segmentation is the task of assigning the feature trajectories in an image sequence to different motions. Hypergraph-based approaches use a hypergraph, whose edges can connect more than two trajectories, to incorporate higher-order similarities for the estimation of motion clusters. They follow the concept of hypothesis generation and validation.

Our approach uses a simple but effective model for incorporating motion-coherent affinities. The hypotheses generated from the resulting hypergraph lead to a significant decrease of the segmentation error.

Recent Publications:

- K. Cordes, C. Ray’onaldo, H. Broszio: "Motion-Coherent Affinities for Hypergraph Based Motion Segmentation", International Conference on Computer Analysis of Images and Patterns (CAIP), Springer LNCS, pp. 121-132, August 2017